Roots of Resilience, a 2008 report from the UN and partner organizations, examines resource-dependent communities with an emphasis on ownership, capacity, and connection.

Here is the nut graph, from “World Resources 2008: Roots of Resilience — Growing the Wealth of the Poor,” a joint publication of the United Nations Development Programme, United Nations Environment Programme, World Bank, and World Resources Institute:

In this volume we explore the essential factors behind scaling up environmental income and resilience for the poor. …

Our thesis is that successfully scaling up environmental income for the poor requires three elements: it begins with ownership — a groundwork of good governance that both transfers to the poor real authority over local resources and elicits local demand for better management of these resources. Making good on this demand requires unlocking and enabling local capacity for development — in this case, the capacity of local communities to manage ecosystems competently, carry out ecosystem-based enterprises, and distribute the income from these enterprises fairly. The third element is connection: establishing adaptive networks that connect and nurture nature-based enterprises, giving them the ability to adapt, learn, link to markets, and mature into businesses that can sustain themselves and enter the economic mainstream.

I was thinking about how these lessons might apply closer to home. One approach would be to examine the role of community-based management on U.S. lands and waters.

A promising example from West Hawaii is described in the 2009 paper "Hawaiian Islands Marine Ecosystem Case Study: Ecosystem- and Community-Based Management in Hawaii," by Brian Tissot, William Walsh, and Mark Hixon:

In response to long-term pressure from Hawaiian communities to promote local co-management of marine resources, the Hawaii legislature passed the Community-Based Subsistence Fishing Area (CBSFA) Act in 1994 (Minerbi, 1999). This law established a legal process whereby DLNR [the State’s Department of Land and Natural Resources] could designate areas as CBSFAs to allow local communities to assist in the development of enforcement regulations and procedures and fishery management plans that incorporate traditional knowledge.

These communities contain a high proportion of native Hawaiians and are generally organized around traditional Hawaiian ahupua‘a, or former geopolitical land divisions located within individual watersheds (Friedlander et al., 2002; Tissot, 2005). Since 1995, three such areas have been designated as CBSFAs in Hawaii. …

The West Hawaii community has a long history of collaboration regarding resource conflicts, primarily concerning the aquarium fishery, which extends back into the late 1980s (Walsh, 2000; Maurin & Peck, 2008). … Synergy among these organizations, along with high community involvement and support, eventually created a critical mass for effective co-management through Act 306 of the Hawaii State Legislature in 1998 (Hawaii Revised Statutes 188F). …

The specific mandates of Act 306 required: (1) substantive involvement of the community in resource management decisions; (2) designation of ≥30% of coastal waters as “Fishery Replenishment Areas” (FRAs) where aquarium fish collecting is prohibited; (3) establishment of a portion of the FRAs as marine reserves, or no-take areas, where fishing is prohibited; (4) evaluation of the effectiveness of these FRAs after 5 years. …

At the time of the initial five-year evaluation of the FRA network, seven of the ten most heavily collected species (representing 94% of all collected fish) had increased in overall density (Walsh et al., 2004).

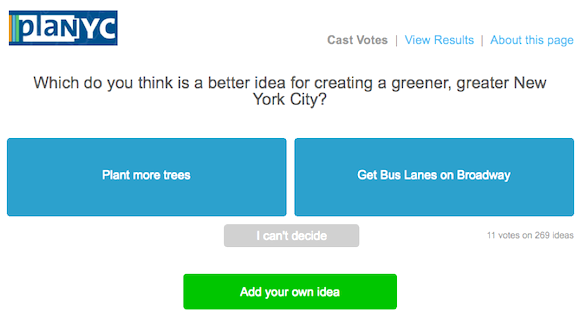

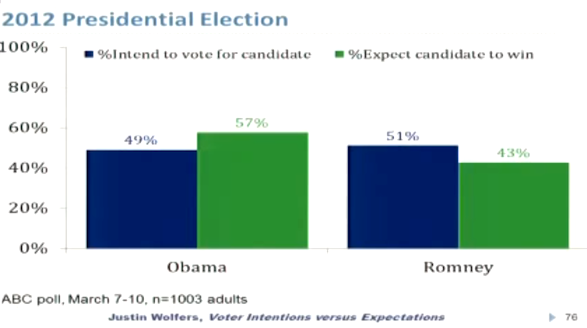